
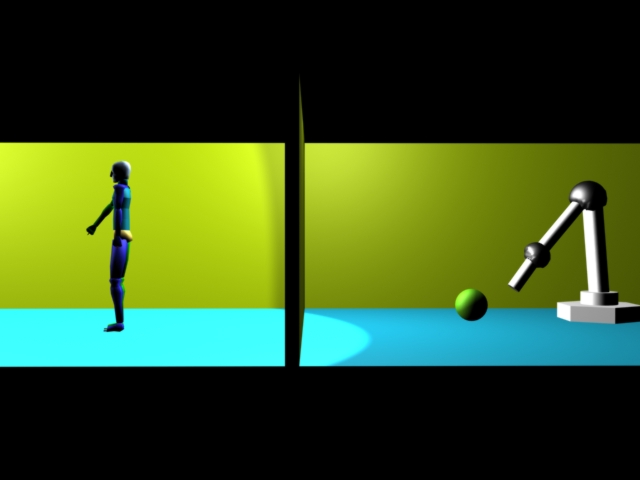

In the long term, the main goal of our research in the ViGIR Lab is to build an augmented and virtual reality deck (or, as I call it, a Holodeck) where robotic systems can interact with and learn from humans by observing their actions. The learning will take place while the human demonstrations are being performed in the augmented reality environment. That is, in these so-called Holodecks, humans will perform tasks such as the tedious assembly of industrial parts, or rescue, maintenance, and exploration in hazardous environments such as the deep sea and outer space. They will do all of this by interacting with safe, computer-generated representations of these environments (see holodeck1.jpg).

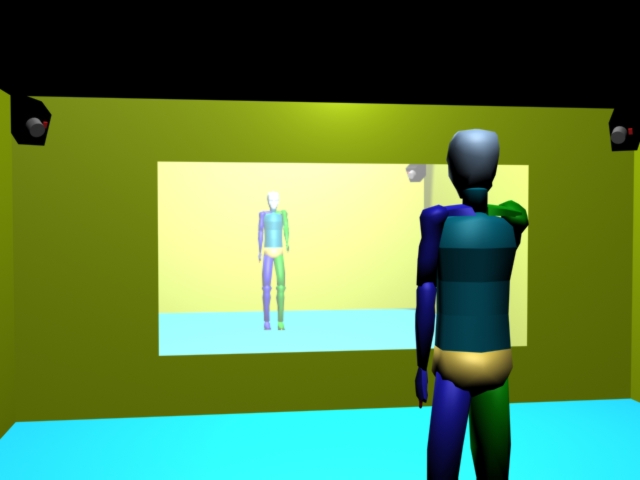

To do that, vision systems inside the Holodeck will capture the motions of heads, legs, arms, hands, and fingers and "transfer" these motions to actual robotic counterparts (mobile robots, industrial robots, etc.). These robotic counterparts will perform the desired task, which may also simply be mimicked by the robots so that it can be reproduced more precisely and a large number of times.

Note that humans will perform the tasks in the augmented reality environment exactly as if they were at the real location. The human motions will then be analyzed by real and virtual cameras that capture and present the motions from different vantage points. The learned motions will then be used to establish control set points for real robotic execution of the same task in the real environment, with the help of multi-stereo tracking and visual servoing.

Most of the research projects below are related to the construction of this Holodeck.

More details to come ...

Details:

- PowerPoint Presentation

- Website
