
Target Tracking from Airborne Video by

Kyng min Han

Motivation

Reconnaissance and surveillance in urban environments are challenging, mainly because of limited visibility and the complexity of background activity. These problems worsen when the video imagery comes from an airborne source: the motion of the camera adds to the background activity, and tasks such as segmenting target objects from the background become even more difficult. Without such segmentation of potential targets, however, the detection, identification, and geo-location of those targets would have to be carried out over the entire image frame, which increases the possibility of false detections and makes real-time operation even harder to achieve.

In this research, we developed a fast and effective real-time method for segmenting multiple moving targets from moving backgrounds. The method relies on the calculation of a differential optical flow, which can separate the motion of the airborne camera from that of the moving targets. Once segmented from the background, the images of possible targets are handled as independent ROIs (regions of interest). Each ROI can be streamed into the second phase of the algorithm for tracking and geo-location of specific targets.

Algorithm overview

The algorithm always requires two images: the current image and the previous image. As soon as these images are read from disk, Phase 1 of the algorithm calculates the optical flow of the current image with respect to the previous one. Since the algorithm runs in real time (30 fps), the images can also be fed directly from a camera. Figure 2 depicts two typical images in a video sequence.

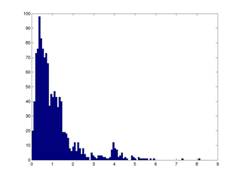

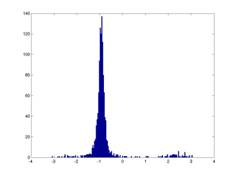
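As a rough illustration of the Phase 1 flow computation, the sketch below estimates the flow at a single pixel with a Lucas-Kanade least-squares fit. The text does not say which optical flow method is used, so the choice of Lucas-Kanade, the function name `lucas_kanade_at`, and the window parameter `half` are assumptions made only for this example.

```python
def lucas_kanade_at(prev, curr, cx, cy, half=2):
    """Estimate the flow (u, v) at pixel (cx, cy), or None if ill-conditioned.

    prev and curr are grayscale images stored as lists of rows. The window of
    side 2*half+1 plus a one-pixel border must lie inside both images.
    Hypothetical helper, not the authors' implementation.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            # Spatial gradients by central differences, temporal by frame difference.
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0
            it = curr[y][x] - prev[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    # Solve [[sxx, sxy], [sxy, syy]] [u, v]^T = [-sxt, -syt]^T.
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None  # no texture in the window (aperture problem)
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v
```

Calling this function on a grid of pixels would give a flow field for the whole frame; a production system would more likely rely on an optimized library routine to reach 30 fps.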

The optical flow (Figure 3a) is then analyzed and two histograms are built. The histograms represent the distributions of the pixel velocities in terms of their magnitude and orientation (Figure 4). Based on these distributions, we can separate the foreground of the image from the background. Each foreground blob is then processed and segmented using a morphological filter and a component labeling algorithm.
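The histogram step can be sketched as follows. This is a minimal illustration, assuming that the background covers most of the frame so that the peak of each histogram describes the camera-induced motion. The function name `foreground_pixels`, the bin widths `mag_bin` and `ang_bin`, and the tolerance `tol` are hypothetical and do not come from the text.

```python
import math
from collections import Counter

def foreground_pixels(flow, mag_bin=1.0, ang_bin=30.0, tol=1):
    """Return the pixels whose flow differs from the dominant (background) motion.

    flow maps (x, y) -> (u, v). mag_bin (pixels/frame), ang_bin (degrees) and
    tol (in bins) are hypothetical tuning parameters.
    """
    if not flow:
        return set()
    n_ang = int(round(360.0 / ang_bin))
    binned = {}
    for pixel, (u, v) in flow.items():
        mag = int(math.hypot(u, v) // mag_bin)
        ang = int((math.degrees(math.atan2(v, u)) % 360.0) // ang_bin) % n_ang
        binned[pixel] = (mag, ang)

    # Two histograms: one over magnitudes, one over orientations.
    mag_hist = Counter(m for m, _ in binned.values())
    ang_hist = Counter(a for _, a in binned.values())
    # Assumption: the histogram peaks correspond to the background motion.
    bg_mag = mag_hist.most_common(1)[0][0]
    bg_ang = ang_hist.most_common(1)[0][0]

    foreground = set()
    for pixel, (mag, ang) in binned.items():
        ang_diff = abs(ang - bg_ang)
        ang_diff = min(ang_diff, n_ang - ang_diff)  # orientation bins wrap around
        if abs(mag - bg_mag) > tol or ang_diff > tol:
            foreground.add(pixel)
    return foreground
```

A target moving in the same direction as the camera, as in Figure 2, would still be separated here as long as its flow magnitude falls outside the background bins.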

Figure 2 – Original sequence, frames (a) and (b): the airborne camera moves towards the northwest, while the gray car in the center of the image moves in the same direction.

Figure 3 – Optical flow (a) before and (b) after removal of background motion (but before filtering of spurious flows in the image).

Figure 4 – Histogram analysis of the optical flow: (a) magnitudes and (b) angles.

An ROI is defined around each object segmented from the background and, based on the object's velocity and size, the most prominent ROI is streamed into the second phase of the algorithm. Figure 3b presents a group of blobs clustered by the similarity of their optical flow. This is the result obtained before the morphological filtering and component labeling. Figure 5a presents the output at the end of Phase 1, with a single ROI already delineated.
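The labeling and ROI selection described above can be sketched as below. Two simplifications are assumptions of this example rather than the method in the text: a minimum-area test stands in for the morphological filter, and prominence is judged by blob size alone instead of velocity and size. The names `label_blobs`, `most_prominent_roi`, and `min_area` are hypothetical.

```python
from collections import deque

def label_blobs(mask):
    """4-connected component labeling; returns one list of (x, y) pixels per blob."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    blobs = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            queue, pixels = deque([(x, y)]), []
            seen[y][x] = True
            while queue:
                px, py = queue.popleft()
                pixels.append((px, py))
                for nx, ny in ((px + 1, py), (px - 1, py), (px, py + 1), (px, py - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            blobs.append(pixels)
    return blobs

def most_prominent_roi(mask, min_area=3):
    """Bounding box (x0, y0, x1, y1) of the largest blob, or None if none qualifies."""
    # Assumption: small blobs are spurious flow and are discarded by area.
    blobs = [b for b in label_blobs(mask) if len(b) >= min_area]
    if not blobs:
        return None
    best = max(blobs, key=len)
    xs = [p[0] for p in best]
    ys = [p[1] for p in best]
    return (min(xs), min(ys), max(xs), max(ys))
```

The returned box plays the role of the single ROI handed to the second phase; ranking blobs by a combination of mean flow speed and area would be closer to the criterion stated in the text.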

Single target tracking - A dominant moving target can be segmented out by the method discussed above. Notice the severe background motion in the video below.

- When Phase 1 (the optical flow method) finds a moving target, it opens an ROI centered on the centroid of the target. Phase 2 then tracks the object in each ROI.

Multi-target tracking using threads - The above approach can be easily extended through multithreading: each thread processes one detected target. Theoretically, each thread could even run on a different machine, so that multi-target tracking can also be achieved in real time.
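The one-thread-per-target idea can be illustrated with a small thread pool. In this sketch `track_target` is a hypothetical placeholder for the Phase 2 tracker (it only returns the center of the ROI), and `track_all` is likewise an assumed name.

```python
from concurrent.futures import ThreadPoolExecutor

def track_target(roi):
    """Hypothetical stand-in for Phase 2: returns the center of the ROI."""
    x0, y0, x1, y1 = roi
    return ((x0 + x1) / 2.0, (y0 + y1) / 2.0)

def track_all(rois):
    """Process every detected ROI in its own worker thread, preserving order."""
    if not rois:
        return []
    with ThreadPoolExecutor(max_workers=len(rois)) as pool:
        return list(pool.map(track_target, rois))
```

For example, `track_all([(0, 0, 2, 2), (10, 10, 14, 12)])` returns one result per target in the same order as the input ROIs. In standard CPython, threads running pure Python code do not execute in parallel, so a real tracker would need native code or separate processes to benefit.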

References

- Han, K. and DeSouza, G. N., "Two Phased Bayesian Filter Applied to Vision Based Geolocation of Moving Targets", Journal of Intelligent and Robotic Systems (submitted).

- Han, K. and DeSouza, G. N., "Target Geolocation from Airborne Video without Terrain Data: A Comprehensive Framework", Journal of Intelligent and Robotic Systems (accepted).

- Han, K., Dong, Y. and DeSouza, G. N., "Tracking Moving Objects from Airborne Video Using Sparse SIFT Flows and Relaxation Labeling", in Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA) (submitted).

- Han, K. and DeSouza, G. N., "Multiple Target Geo-location using SIFT and Multi-Stereo Vision on Airborne Video Sequences", in Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5327-5332, Oct. 2009.

- Han, K. and DeSouza, G. N., "Instantaneous Geo-Location of Multiple Targets From Monocular Airborne Video", 2009 IEEE International Geoscience & Remote Sensing Symposium (IGARSS), pp. IV 1003-6, July 2009, Cape Town, South Africa.
