
Calibration of Vision-Sensor Networks

by

Kyng min Han

Automatic camera calibration of a multi-camera rig

The goal of this research is to eliminate human intervention from the calibration of a multi-camera system. For a given image data set, the system finds the subset of images that optimizes the camera calibration. The algorithm proceeds as follows:

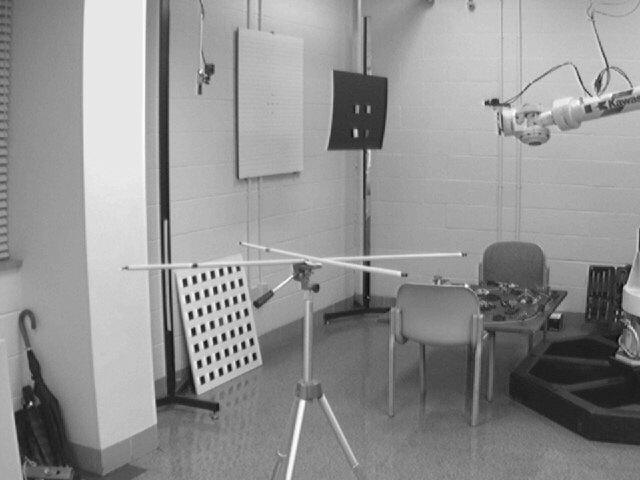

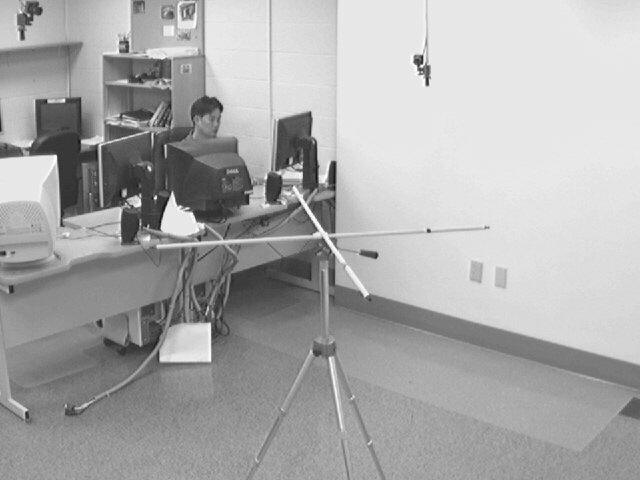

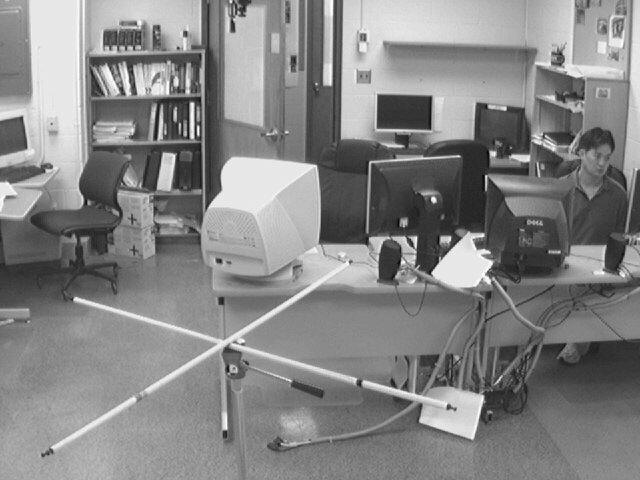

1. Collect images: To begin, a person simply walks around inside the camera rig. All cameras grab images at the same time.

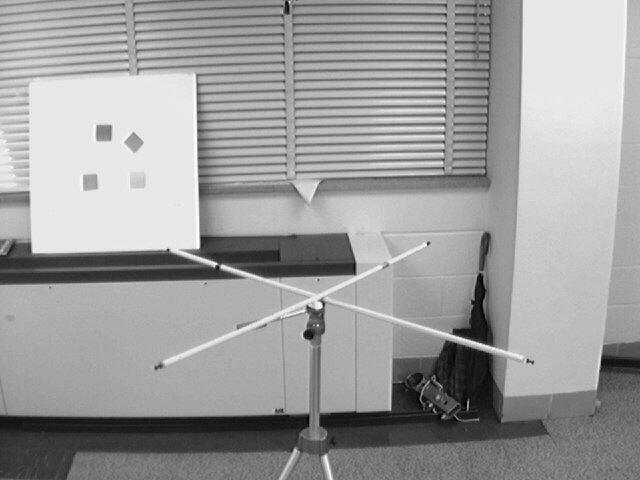

2. Feature point detection: We use the Hough transform to detect lines. The feature points are the intersections of these lines.
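
The intersection step can be illustrated with a minimal sketch. It assumes the lines come in the usual Hough normal form (rho, theta), where each line satisfies x·cos(theta) + y·sin(theta) = rho. The function names and the `min_angle` threshold are hypothetical and are not taken from the original implementation.

```python
import numpy as np

def intersect_hough_lines(line_a, line_b, eps=1e-9):
    """Intersect two lines given as (rho, theta); return (x, y) or None if near-parallel."""
    rho1, theta1 = line_a
    rho2, theta2 = line_b
    # Each line satisfies x*cos(theta) + y*sin(theta) = rho,
    # so the intersection solves a 2x2 linear system.
    A = np.array([[np.cos(theta1), np.sin(theta1)],
                  [np.cos(theta2), np.sin(theta2)]])
    b = np.array([rho1, rho2])
    if abs(np.linalg.det(A)) < eps:
        return None  # parallel (or identical) lines have no unique intersection
    x, y = np.linalg.solve(A, b)
    return float(x), float(y)

def feature_points(lines, min_angle=np.deg2rad(10)):
    """Collect pairwise intersections, skipping pairs that are nearly parallel.

    min_angle is an assumed threshold: nearly parallel lines intersect far
    away and give numerically unstable points.
    """
    points = []
    for i in range(len(lines)):
        for j in range(i + 1, len(lines)):
            dtheta = abs(lines[i][1] - lines[j][1]) % np.pi
            dtheta = min(dtheta, np.pi - dtheta)
            if dtheta < min_angle:
                continue
            p = intersect_hough_lines(lines[i], lines[j])
            if p is not None:
                points.append(p)
    return points
```

For example, `intersect_hough_lines((2.0, 0.0), (3.0, np.pi / 2))` intersects the vertical line x = 2 with the horizontal line y = 3. In practice one would also discard intersections that fall outside the image bounds.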

3. Selecting the best input data set: The proposed framework determines the subset of images that best optimizes the camera calibration process (9 sets for each camera).

4. Determining a reference coordinate frame: Once the calibration is done, the algorithm decides on the reference coordinate frame and a camera path tree. Using this path tree, one can easily bring image points from any other camera into the reference frame.
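
One way to picture the path tree is as a breadth-first traversal over the cameras that share a pairwise calibration, composing rigid transforms along the way. The sketch below assumes the reference camera is already chosen and that each pairwise result is available as a 4x4 homogeneous matrix. The function name `build_path_tree` and the `pairwise` dictionary layout are hypothetical; how the original algorithm selects the reference frame is not shown here.

```python
from collections import deque
import numpy as np

def build_path_tree(pairwise, reference):
    """Build a camera path tree rooted at the reference camera.

    pairwise: dict {(a, b): T_ab}, where T_ab is a 4x4 matrix that maps
              homogeneous points from camera b's frame into camera a's frame.
    Returns (to_ref, parent): to_ref[c] maps points from camera c's frame
    into the reference frame; parent[c] is the previous camera on the path.
    """
    # Store every edge in both directions so the tree can grow either way.
    neighbors = {}
    for (a, b), T in pairwise.items():
        T = np.asarray(T, dtype=float)
        neighbors.setdefault(a, []).append((b, T))                  # b -> a
        neighbors.setdefault(b, []).append((a, np.linalg.inv(T)))   # a -> b

    to_ref = {reference: np.eye(4)}
    parent = {reference: None}
    queue = deque([reference])
    while queue:
        cam = queue.popleft()
        for other, T_cam_other in neighbors.get(cam, []):
            if other not in to_ref:
                # Chain: other -> cam -> reference.
                to_ref[other] = to_ref[cam] @ T_cam_other
                parent[other] = cam
                queue.append(other)
    return to_ref, parent
```

Breadth-first search keeps each camera's path to the reference as short as possible in number of hops, which limits how many pairwise estimates (and their errors) get chained together. Cameras with no connection to the reference simply do not appear in `to_ref`.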

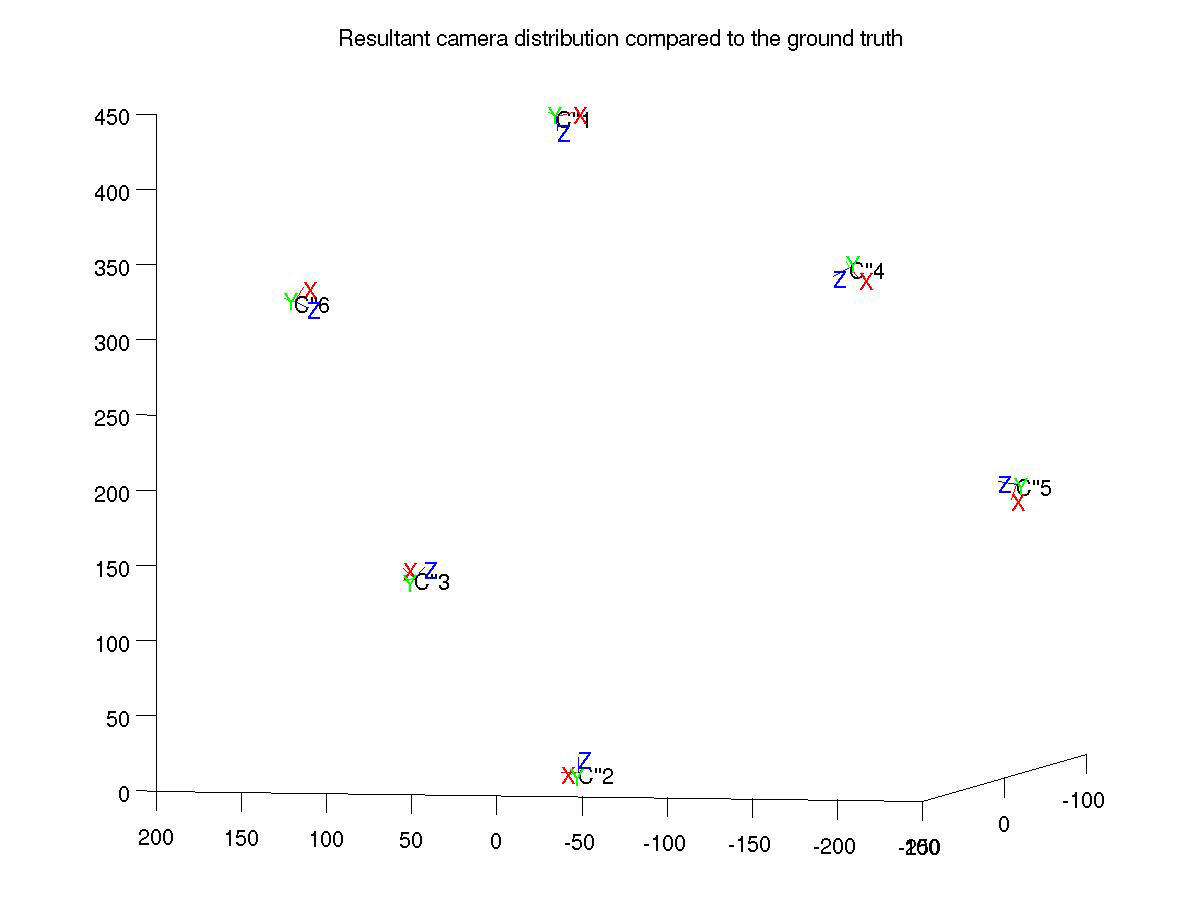

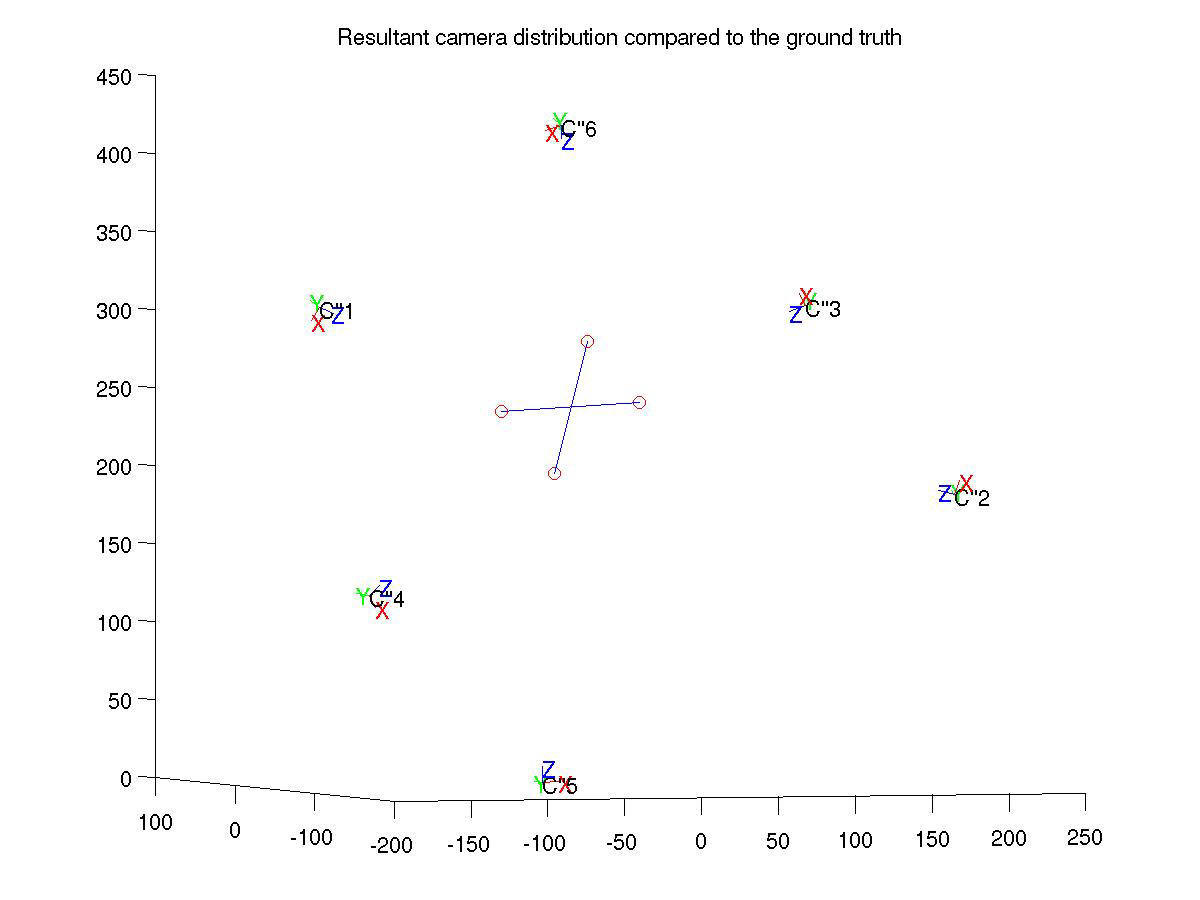

5. Extrinsic parameter test: The figure above illustrates the real camera positions in our lab (left) and the camera frames reconstructed from their extrinsic parameters (right).

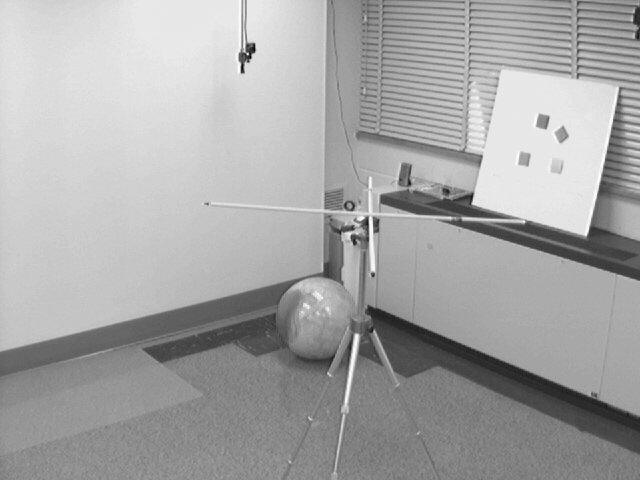

6. 3D object reconstruction: Here I made a cross-shaped object. The four corner points (the tips of each bar) are reconstructed.
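
Reconstructing a point seen by several calibrated cameras can be sketched with standard linear (DLT) triangulation. This is a generic technique shown for illustration and is not necessarily the method used in this project. The function name `triangulate` is hypothetical, and each camera is assumed to be described by a 3x4 projection matrix expressed in the common reference frame.

```python
import numpy as np

def triangulate(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera projection matrices P = K [R | t].
    pixels:      list of (u, v) image observations, one per camera.
    Returns the 3D point as a length-3 array.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        P = np.asarray(P, dtype=float)
        # From u = (P[0] . X) / (P[2] . X) and v = (P[1] . X) / (P[2] . X).
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

With noisy detections, the linear estimate is usually refined by minimizing the reprojection error, and using all cameras that see the point makes the estimate more stable than using only two.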

Object views from 6 different cameras

Reconstructed points (red circles)

Results: a) Real camera positions b) Reconstructed camera coordinate frames

References

- Han, K., Dong, Y. and DeSouza, G. N., "Autonomous Calibration of a Camera Rig on a Vision Sensor Network," in Proceedings of the 2010 IFAC International Conference on Informatics in Control, Automation and Robotics (ICINCO), June 2010, Portugal.

- Han, K. and DeSouza, G. N., "A Feature Detection Algorithm for Autonomous Camera Calibration," in Proceedings of the 2007 IFAC International Conference on Informatics in Control, Automation and Robotics (ICINCO), pp. 286-291, May 2007, France.
